Data breaches have become a part of life. They impact hospitals, universities, government agencies, charitable organizations and commercial enterprises. In healthcare alone, 2020 saw 640 breaches, exposing 30 million personal records, a 25% increase over 2019 that equates to roughly two breaches per day, according to the U.S. Department of Health and Human Services. On a global basis, 2.3 billion records were breached in February 2021.

It’s painfully clear that existing data loss prevention (DLP) tools are struggling to deal with the data sprawl, ubiquitous cloud services, device diversity and human behaviors that constitute our virtual world.

Conventional DLP solutions are built on a castle-and-moat framework in which data centers and cloud platforms are the castles holding sensitive data. They’re surrounded by networks, endpoint devices and human beings that serve as moats, defining the defensive security perimeters of every organization. Conventional solutions assign sensitivity ratings to individual data assets and monitor these perimeters to detect the unauthorized movement of sensitive data.

Unfortunately, these historical security boundaries are becoming increasingly ambiguous and somewhat irrelevant as bots, APIs and collaboration tools become the primary conduits for sharing and exchanging data.

In reality, data loss is only half the problem confronting a modern enterprise. Corporations are routinely exposed to financial, legal and ethical risks associated with the mishandling or misuse of sensitive information within the corporation itself. The risks associated with the misuse of personally identifiable information have been widely publicized.

However, risks of similar or greater severity can result from the mishandling of intellectual property, material nonpublic information, or any type of data that was obtained through a formal agreement that placed explicit restrictions on its use.

Conventional DLP frameworks are incapable of addressing these challenges. We believe they need to be replaced by a new data misuse protection (DMP) framework that safeguards data from unauthorized or inappropriate use within a corporate environment in addition to its outright theft or inadvertent loss. DMP solutions will provide data assets with more sophisticated self-defense mechanisms instead of relying on the surveillance of traditional security perimeters.

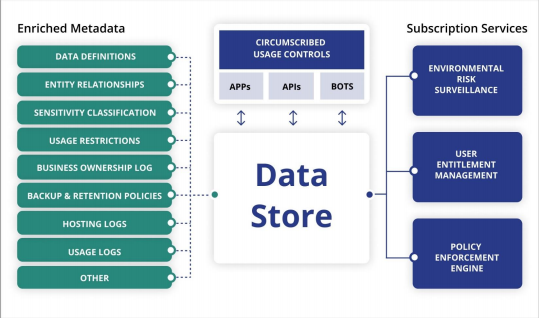

It’s a subtle but significant distinction. Instead of applying tags and policies to data assets, the assets themselves should “own” a rich set of metadata characteristics and subscribe to services that protect their integrity and control their usage. New approaches to managing data asset lineage, hosting environment vulnerabilities, user entitlements and policy enforcement are emerging as foundational pillars of these next-generation DMP solutions.

Critical data assets should possess a comprehensive understanding of their genetic family tree. Primary assets should contain metadata describing how, when, why and where they were originally constructed. This information should be inherited by all derivative assets. Proper designation and consistent propagation of security classifications and usage restrictions can eliminate many of the brute-force scanning and tagging processes that are routinely used today to manage sensitive data.
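To make the inheritance idea concrete, here is a minimal sketch of lineage metadata that a derivative asset picks up from its sources. Every name in it (`AssetMetadata`, `derive`, the four sensitivity labels, the sample assets) is a hypothetical illustration, not the schema of any product mentioned in this article. It assumes a derivative takes the most sensitive classification among its sources and the union of their usage restrictions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical sensitivity labels, ordered from least to most sensitive.
LEVELS = ["public", "internal", "confidential", "restricted"]


@dataclass(frozen=True)
class AssetMetadata:
    name: str
    created_by: str        # who constructed the asset
    purpose: str           # why it was constructed
    location: str          # where it lives
    classification: str    # one of LEVELS
    usage_restrictions: frozenset = frozenset()
    parents: tuple = ()    # names of the source assets (the "family tree")
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def derive(name, created_by, purpose, location, *sources):
    """Build metadata for a derivative asset from one or more source assets."""
    if not sources:
        raise ValueError("a derivative asset needs at least one source")
    # Assumption: inherit the most sensitive classification among the sources.
    classification = max((s.classification for s in sources), key=LEVELS.index)
    # Assumption: inherit the union of every source's usage restrictions.
    restrictions = frozenset().union(*(s.usage_restrictions for s in sources))
    parents = tuple(s.name for s in sources)
    return AssetMetadata(
        name, created_by, purpose, location, classification, restrictions, parents
    )


crm = AssetMetadata(
    "crm_export", "sales-ops", "billing", "example-bucket/crm",
    "confidential", frozenset({"no-marketing"}),
)
logs = AssetMetadata(
    "web_logs", "platform", "debugging", "example-bucket/logs",
    "internal", frozenset({"retain-90-days"}),
)
report = derive("churn_report", "analytics", "churn model", "warehouse", crm, logs)
```

Because the classification and restrictions travel with the derivative, nothing has to rescan `churn_report` later to discover that it contains confidential data.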

Cyberhaven has responded to this challenge by devising a tracing solution that can retroactively determine the lineage of data files. Secure Circle provides a means to ensure that data files transferred to laptops and smartphones inherit the conditional access rules that were established for their source systems. Manta has adopted a wholly different approach: It establishes lineage relationships by continuously scanning the software algorithms being used to construct derivative assets.

More recently, we’ve seen the emergence of policy-as-code vendors like Stacklet, Accurics, Bridgecrew and Concourse Labs. These platforms will help enterprises populate extended metadata schema at the time applications are created and ensure a level of schema consistency and coverage that hasn’t been achieved in the past.

There are also new tools that can provide assets with continuous information regarding the security of their current hosting environment. Kenna Security merges data from existing security tools with global threat intelligence to rate the vulnerability of individual infrastructure elements within an asset’s hosting environment. Traceable monitors end-user behaviors, API interactions, data movements and code execution to identify potential indicators of data mishandling within and between applications.

People, not applications, networks or endpoints, have become the primary security perimeter in today’s cloud-first, choose-the-handiest-device, collaboration-obsessed world. The attack surface surrounding individual data assets is simply the sum of the access permissions and authorization privileges that have been granted to data users.

In simple English, it’s the collection of who can access an asset and what they can do after access has been obtained. Authomize employs an analytics engine to inventory existing user privileges and suggest steps to curb privilege escalation. Okera enables entitlement rights to be managed on an asset-specific basis by data stewards distributed across multiple business functions. And companies such as CloudKnox, Ermetic and Sonrai Security provide the ability to manage human and machine privileges within public cloud platforms.
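The "who can access an asset and what they can do" view can be sketched as a simple inventory of grants. This is an illustrative toy, not how any of the vendors above work: the `GRANTS` list, the principal and asset names, and both helper functions are assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical grants: (principal, asset, action).
# Principals can be people or machine identities.
GRANTS = [
    ("alice", "payroll_db", "read"),
    ("alice", "payroll_db", "export"),
    ("bob", "payroll_db", "read"),
    ("etl-bot", "payroll_db", "read"),
    ("etl-bot", "payroll_db", "write"),
    ("bob", "wiki", "read"),
]


def build_surface(grants):
    """Group grants by asset: {asset: {principal: {actions}}}."""
    surface = defaultdict(lambda: defaultdict(set))
    for principal, asset, action in grants:
        surface[asset][principal].add(action)
    return surface


def surface_size(surface, asset):
    """Count the distinct (principal, action) pairs granted on an asset."""
    principals = surface.get(asset, {})
    return sum(len(actions) for actions in principals.values())


surface = build_surface(GRANTS)
payroll_size = surface_size(surface, "payroll_db")
wiki_size = surface_size(surface, "wiki")
```

Counting pairs is a crude measure, since it treats `read` and `export` as equally risky, but it shows why trimming unused privileges shrinks an asset's exposure directly.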

In a next-generation DMP framework, individual data stores will possess self-defense mechanisms that rely upon enriched metadata, subscriptions to specialized services and the use of customized applications, APIs and bots to regulate a wide variety of usage scenarios.

Many security tools employ automated procedures to enforce data-usage policies. Automation accelerates responses to security events while reducing the workload on security teams. Although well intended, distributing policy enforcement across multiple tools frequently produces confusion and inconsistent results. Policy sprawl can undermine the effectiveness of any security framework.

This issue can be overcome if individual data assets can subscribe to policy enforcement engines that are uniquely configured for their protection. However, abstracting different aspects of policy administration into one or more freestanding brokerage services is difficult to achieve in practice, because the business rules embedded in existing tools are rarely exposed through readable APIs.

This complicates efforts to discover, normalize and orchestrate predetermined procedures. Construction of configurable policy brokerage services that can orchestrate asset-specific responses to different usage scenarios is an aspirational goal that merits immediate attention from both entrepreneurs and VCs.
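As a rough sketch of what such a brokerage service might look like, the toy below lets an asset subscribe to policies and then evaluates each usage request against all of them in one place. `PolicyBroker`, `Request` and the sample rules are hypothetical names invented for this illustration; no existing tool's API is implied. It assumes default deny for assets with no subscriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    asset: str
    action: str
    purpose: str


class PolicyBroker:
    """Hypothetical central broker: assets subscribe to the policies that protect them."""

    def __init__(self):
        self._subscriptions = {}  # asset name -> list of policy callables

    def subscribe(self, asset, policy):
        """Register a policy: a callable taking a Request and returning True to allow."""
        self._subscriptions.setdefault(asset, []).append(policy)

    def is_allowed(self, request):
        policies = self._subscriptions.get(request.asset)
        if not policies:
            # Assumption: default deny for assets without any subscribed policy.
            return False
        # Every subscribed policy must allow the request.
        return all(policy(request) for policy in policies)


broker = PolicyBroker()
# Example rules for data obtained under an agreement that restricts its use.
broker.subscribe("deal_pipeline", lambda r: r.purpose == "due-diligence")
broker.subscribe("deal_pipeline", lambda r: r.action != "export")

ok = broker.is_allowed(Request("ana", "deal_pipeline", "read", "due-diligence"))
blocked_export = broker.is_allowed(
    Request("ana", "deal_pipeline", "export", "due-diligence")
)
blocked_purpose = broker.is_allowed(
    Request("ana", "deal_pipeline", "read", "marketing")
)
unknown_asset = broker.is_allowed(Request("ana", "hr_records", "read", "audit"))
```

Keeping the rules in one broker, rather than scattered across individual tools, is what would avoid the policy sprawl described above. The hard part in practice remains extracting those rules from existing products.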

Human interactions with sensitive data can be further regulated through expanded use of customized low-code applications, RPA software bots and data service APIs. These automation technologies can be used to restrict the ways in which data is sourced, transformed and shared. They’re effective control mechanisms when used intentionally to prevent misuse.

The transition from DLP to DMP will be a journey, not an event. New tools and capabilities based upon these principles will emerge in a piecemeal fashion. Progressive security teams will initially use these new capabilities to augment their current practices and ultimately replace legacy DLP solutions altogether.

The lack of a comprehensive DMP tech stack should not be used as an excuse to delay a wholesale reimagining of data security as a DMP problem, not a DLP issue. Several of the concepts referenced above, such as metadata enrichment, hosting environment surveillance, end-user entitlement management, and the use of automation tools to regulate human-data interactions, can be implemented today using cloud-based services.

Proactive implementation of these practices now will enable progressive security teams to obtain immediate benefits from new DMP capabilities that are likely to emerge in the near future.

Disclosure: Sid Trivedi is a board member of Stacklet and CloudKnox; they are Foundation Capital investments. Mark Settle is an advisor to Authomize.