Thinking About AI: Part 2 - Structural Risks

Yesterday I wrote a post on where we are with artificial intelligence by providing some history and foundational ideas around neural network size. Today I want to start in on risks from artificial intelligence. These fall broadly into two categories: existential and structural. Existential risk is about AI wiping out most or all of humanity. Structural risk is about AI aggravating existing problems, such as wealth and power inequality in the world. Today’s post is about structural risks.

Structural risks of AI have been with us for quite some time. A great example is the YouTube recommendation algorithm. As far as we know, the algorithm optimizes for engagement because YouTube's primary source of revenue is advertising. This means the algorithm is more likely to surface videos that have an emotional hook than ones that require the viewer to think. It will also pick content that reinforces a point of view the viewer has already encountered, instead of surfacing opposing views. And finally, it will tend to recommend videos that have already demonstrated engagement over those that have not, giving rise to a “rich getting richer” effect in influence.

With the current pace of progress, it may at first look as if these structural risks are about to explode: start using models everywhere and you wind up with bias risk, “rich get richer” risk, wrong-objective-function risk, and so on, everywhere. This is a completely legitimate concern and I don’t want to dismiss it.

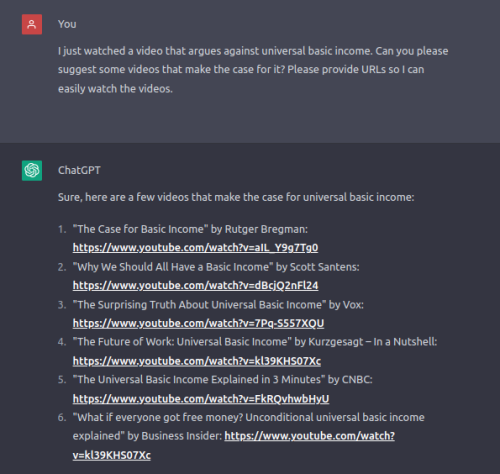

On the other hand, there are also new opportunities that come from potentially giving broad access to models and thus empowering individuals. For example, I tried the following prompt in ChatGPT: “I just watched a video that argues against universal basic income. Can you please suggest some videos that make the case for it? Please provide URLs so I can easily watch the videos.” It quickly produced a list of videos for me to watch. Because so much content has been ingested, users can now have their own “Opposing View Provider” (something I had suggested years ago).

There are many other ways in which these models can empower individuals, for example by summarizing a text at a reading level that is more accessible, or by pointing somebody in the right direction when they have encountered a problem. And here we immediately run into some interesting regulatory challenges. For example, I am quite certain that ChatGPT could give pretty good free legal advice, but doing so would run afoul of the regulations on practicing law. So part of the structural risk issue is that our existing regulations predate any such artificial intelligence and will, oddly, contribute to making its power available only to a smaller group (imagine more profitable law firms instead of widely available legal advice).

There is also a strong interaction here between how many such models will exist (anywhere from a small oligopoly to a great many) and the extent to which end users can embed these capabilities programmatically rather than having to use them manually. To continue my earlier example, if I have to head over to ChatGPT every time I want to ask for an opposing view, I will be less likely to do so than if I could script the sites I use so that an intelligent agent can represent me in my interactions. This is, of course, one of the core suggestions I make in my book The World After Capital, in a section titled “Bots for All of Us.”

I am sympathetic to those who point to structural risks as a reason to slow down the development of these new AI systems. But I believe that the better answer to structural risks is to make sure that there are many AIs, that they can be controlled by end users, that we have programmatic access to these and other systems, and so on. Put differently, structural risks are best addressed by having more artificial intelligence with broader access.

We should still think about other regulation to address structural risks, but much of what has been proposed here doesn’t make a ton of sense. For example, publishing an algorithm isn’t that helpful if you don’t also publish all the data running through it. In the case of a neural network, one could alternatively require publishing the network structure and weights, but that would be tantamount to open-sourcing the entire model, since anyone could then replicate it. So for now I believe the focus of regulation should be on avoiding a situation where there are just a few huge models that hold a ton of market power.

Some will object right here that this would dramatically aggravate existential risk, but in my next post I will argue why that may not be the case.

Posted: 26th March 2023

Tags: artificial intelligence, ai